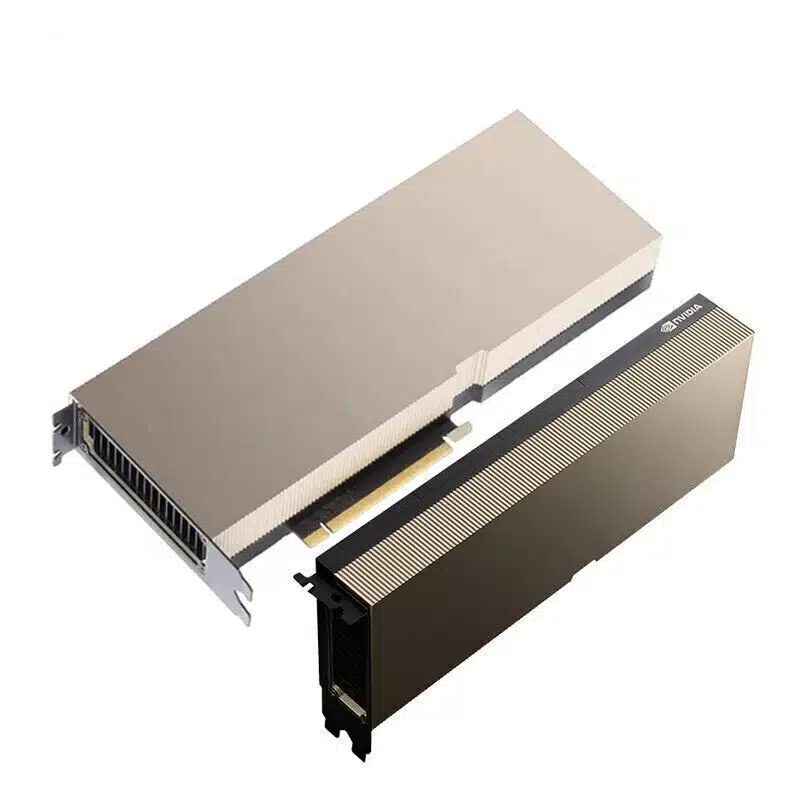

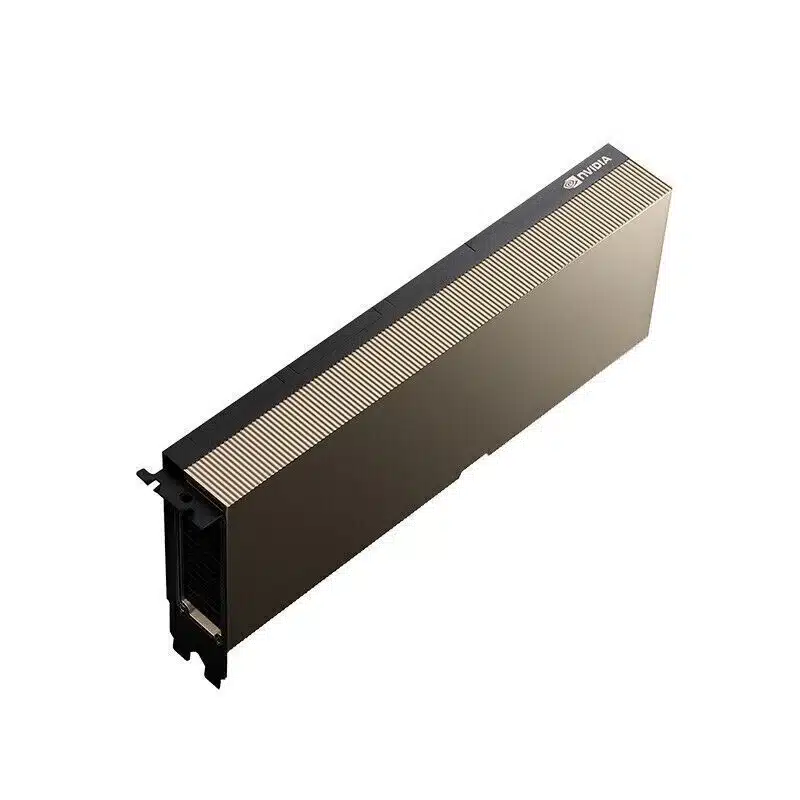

Nvidia Tesla A100 Ampere GPU Accelerator 40GB SXM4 Graphics Card Deep learning

13,900.00 AED

- Sold by: Cloudshare Tech
- Delivery within 7 working days

| Brands | NVIDIA |
|---|---|
| Condition | New open box |
| Warranty | 1 Month |
| Shipping | Delivery Within 7 Working Days |

NVIDIA Tesla A100 40GB SXM4 – The Ultimate GPU Accelerator for AI, Deep Learning & HPC

The NVIDIA Tesla A100 40GB SXM4 is a flagship data-center GPU built on the Ampere architecture. It is designed to accelerate AI training, deep learning inference, high-performance computing (HPC), and large-scale data analytics. Its 40GB of HBM2 memory delivers about 1.6 TB/s of bandwidth for demanding enterprise workloads.
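To put 40GB in perspective, the following Python sketch gives a rough, weights-only upper bound on how many model parameters fit in memory at common precisions. It assumes 40GB means 40 × 10^9 bytes and ignores activations, gradients, optimizer state, and CUDA runtime overhead, so usable capacity in practice is lower. The `max_params` helper is illustrative only.

```python
# Rough, weights-only estimate of how many parameters fit in GPU memory.
# Assumptions (not from the listing):
#   * 40 GB is taken as 40 * 10**9 bytes (decimal gigabytes);
#   * activations, gradients, optimizer state and CUDA context are ignored,
#     so real usable capacity is noticeably lower.

BYTES_PER_PARAM = {
    "fp32": 4,  # single precision
    "fp16": 2,  # half precision
    "bf16": 2,  # bfloat16, supported by Ampere Tensor Cores
    "int8": 1,  # 8-bit quantized weights
}


def max_params(memory_gb: float, dtype: str) -> int:
    """Upper bound on the parameter count whose weights alone fit in memory_gb."""
    total_bytes = int(memory_gb * 10**9)
    return total_bytes // BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        billions = max_params(40, dtype) / 1e9
        # e.g. "fp16: ~20B parameters (weights only)"
        print(f"{dtype}: ~{billions:.0f}B parameters (weights only)")
```

Training typically needs several times more memory per parameter than these figures, because gradients and optimizer state must be stored alongside the weights.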

Designed for modern AI infrastructure, supercomputing, and scientific research, the A100 turns a compatible SXM4 server (such as one built on an NVIDIA HGX A100 baseboard) into a high-performance compute node. It shortens training times, accelerates complex models, and enables parallel processing at large scale.

Key Features

- Ampere Architecture – NVIDIA's data-center GPU architecture, optimized for AI, HPC, and large-scale compute workloads.
- 40GB HBM2 Memory – High-bandwidth memory for processing large datasets and deep learning models.
- Multi-Instance GPU (MIG) – Partition the A100 into up to 7 isolated GPU instances, each with its own compute and memory resources.
- Tensor Core Acceleration – Third-generation Tensor Cores supporting TF32, BF16, FP16, INT8, and FP64 math for fast AI training and inference.
- SXM4 Form Factor – A socketed module for NVIDIA HGX A100 baseboards, offering higher power limits and NVLink connectivity than the PCIe version. It does not fit a standard PCIe slot.
- High Throughput & Scalability – Ideal for multi-GPU servers, clusters, data centers, and cloud AI environments.
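As a rough illustration of MIG partitioning, the Python sketch below checks whether a set of requested GPU instances fits on one A100 40GB by counting slices. It assumes the A100 40GB profile sizes (1g.5gb through 7g.40gb, drawn from 7 compute slices and 8 memory slices) and deliberately ignores MIG's placement rules, so a `True` result is necessary but not sufficient. The `fits` function is a hypothetical helper, not part of any NVIDIA tool.

```python
# Slice-count check for MIG GPU-instance profiles on an A100 40GB.
# Assumption: each profile costs (compute slices, memory slices) as below,
# out of 7 compute slices and 8 memory slices (~5 GB each) per GPU.
# Placement rules enforced by the real driver are NOT modeled here.

PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def fits(requested: list[str]) -> bool:
    """Return True if the requested instances fit by slice count alone."""
    compute = sum(PROFILES[p][0] for p in requested)
    memory = sum(PROFILES[p][1] for p in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


if __name__ == "__main__":
    print(fits(["1g.5gb"] * 7))            # True: the "up to 7 instances" case
    print(fits(["3g.20gb", "3g.20gb"]))    # True: two half-GPU instances
    print(fits(["4g.20gb", "4g.20gb"]))    # False: needs 8 compute slices
```

On a real system, the driver's own list of supported profiles (for example via `nvidia-smi mig -lgip`) is the authoritative source.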

Why Choose the NVIDIA A100?

The Tesla A100 is an industry-standard accelerator for AI engineers, research labs, cloud providers, and enterprises that require high GPU throughput. It offers:

- Up to 20x higher performance than the previous-generation NVIDIA Volta (V100) on select AI workloads, according to NVIDIA
- Fast parallel processing for large models and simulations
- Reliable operation in 24/7 data-center environments
- Support for TensorFlow, PyTorch, RAPIDS, and other major AI frameworks through the CUDA platform
- Ample compute headroom for current and next-generation AI and ML applications

Whether you’re training massive neural networks, running real-time inference, or performing complex simulation workloads, the A100 gives you the performance foundation needed for breakthrough innovation.

Ideal Use Cases

- Deep Learning Training & Inference
- High-Performance Computing (HPC)
- AI Research & Modeling
- Scientific Computing & Simulations
- Big Data Analytics & Enterprise Workloads
- Cloud Computing & Multi-GPU Clusters
- Data Center Acceleration

Technical Specifications

- Architecture: NVIDIA Ampere
- Memory: 40GB HBM2
- Form Factor: SXM4
- Tensor Cores: 432 (third generation)
- CUDA Cores: 6912
- Memory Bandwidth: 1,555 GB/s (about 1.6 TB/s)
- Multi-Instance GPU: Up to 7 MIG instances
- NVLink Support: Yes (third-generation NVLink, up to 600 GB/s)
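The headline compute and bandwidth figures follow from simple arithmetic, which the Python sketch below reproduces. The 1410 MHz boost clock and the 5120-bit HBM2 interface running at 1215 MHz are assumptions taken from NVIDIA's published A100 specifications rather than from this listing; the function names are illustrative.

```python
# Back-of-the-envelope peak figures for the A100 40GB SXM4.
# Assumed inputs (not stated in the listing): 1410 MHz boost clock,
# 5120-bit HBM2 interface at 1215 MHz with double data rate.


def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Each CUDA core retires one fused multiply-add (2 FLOPs) per cycle."""
    gflops = cuda_cores * 2 * boost_clock_ghz
    return gflops / 1000.0


def peak_bandwidth_gbs(bus_width_bits: int, mem_clock_mhz: float) -> float:
    """HBM2 transfers data on both clock edges, hence the factor of 2."""
    bytes_per_transfer = bus_width_bits / 8
    mb_per_s = bytes_per_transfer * mem_clock_mhz * 2
    return mb_per_s / 1000.0


if __name__ == "__main__":
    print(f"{peak_fp32_tflops(6912, 1.41):.1f} TFLOPS")  # 19.5 TFLOPS
    print(f"{peak_bandwidth_gbs(5120, 1215):.0f} GB/s")  # 1555 GB/s
```

The FP32 result covers the CUDA cores only; the Tensor Cores reach much higher throughput on TF32, FP16, and BF16 math.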



Reviews

There are no reviews yet.